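Assignment 3 has five questions. The first two implement an RNN and an LSTM from scratch for image captioning, the third covers several neural-network visualizations, the fourth is the familiar style transfer, and the last is generative adversarial networks. Each question is described in detail below. All of the assignment implementations have been uploaded to GitHub.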

Q1: Image Captioning with Vanilla RNNs

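Q1 and Q2 train on the Microsoft COCO dataset. The data has been preprocessed: all image features are extracted from the fc7 layer of a VGG-16 network pretrained on ImageNet. The extracted features are stored in train2014_vgg16_fc7.h5 and val2014_vgg16_fc7.h5. To reduce processing time and memory use, PCA is applied to bring the 4096-dimensional VGG-16 features down to 512 dimensions, and the reduced features are stored in train2014_vgg16_fc7_pca.h5 and val2014_vgg16_fc7_pca.h5. For training, every word is mapped to an integer ID, and these mappings are stored in coco2014_vocab.json. Sample training captions are shown below. Several special tokens are added during training: `<START>` and `<END>` mark the beginning and end of each caption, rare words are replaced with `<UNK>`, and shorter captions are padded with `<NULL>` after `<END>`.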

Sample captions from the training set (images omitted):

`<START> a <UNK> bike learning up against the side of a building <END>`

`<START> a desk and chair with a computer and a lamp <END>`
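The token scheme described above can be sketched in a few lines. This is only an illustration: `encode_caption` and `toy_vocab` are hypothetical names introduced here, and the IDs in the toy vocabulary are made up rather than taken from coco2014_vocab.json.

```python
def encode_caption(words, word_to_idx, max_len):
    """Map a list of words to a fixed-length list of integer IDs."""
    # Words missing from the vocabulary become <UNK>.
    body = [w if w in word_to_idx else "<UNK>" for w in words]
    tokens = ["<START>"] + body + ["<END>"]
    if len(tokens) > max_len:
        raise ValueError("caption is longer than max_len")
    # Short captions are padded with <NULL> after <END>.
    tokens += ["<NULL>"] * (max_len - len(tokens))
    return [word_to_idx[t] for t in tokens]


# Hypothetical toy vocabulary, for illustration only.
toy_vocab = {"<NULL>": 0, "<START>": 1, "<END>": 2, "<UNK>": 3,
             "a": 4, "desk": 5, "and": 6, "chair": 7}

ids = encode_caption("a desk and a zebra".split(), toy_vocab, max_len=9)
print(ids)
```

Padding every caption to the same length lets a whole minibatch be stored as one integer array of shape (N, T).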

The RNN implementation lives in cs231n/rnn_layers.py. Its core computations are as follows:

Forward pass

`def rnn_step_forward(x, prev_h, Wx, Wh, b):`
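A minimal sketch of a single forward step, assuming the standard vanilla RNN update $h_t = \tanh(x_t W_x + h_{t-1} W_h + b)$ with a batched input of shape (N, D) and weights of shape (D, H) and (H, H). The function body and the cache layout are assumptions made for illustration, not the author's code:

```python
import numpy as np


def rnn_step_forward(x, prev_h, Wx, Wh, b):
    """One timestep of a vanilla RNN with a tanh nonlinearity.

    x: (N, D) input, prev_h: (N, H) previous hidden state,
    Wx: (D, H), Wh: (H, H), b: (H,).
    """
    next_h = np.tanh(x @ Wx + prev_h @ Wh + b)
    # Assumed cache layout: everything the backward step will need.
    cache = (x, prev_h, Wx, Wh, next_h)
    return next_h, cache
```

Storing `next_h` in the cache is a convenience: the derivative of tanh can be written as $1 - h_t^2$, so the pre-activation does not have to be kept.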

Backward pass

`def rnn_step_backward(dnext_h, cache):`
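A matching sketch of the single-step backward pass, again under assumptions: the cache is taken to be the tuple `(x, prev_h, Wx, Wh, next_h)`, and the nonlinearity is tanh, whose local gradient is $1 - h_t^2$.

```python
import numpy as np


def rnn_step_backward(dnext_h, cache):
    """Backward pass for one vanilla RNN timestep.

    dnext_h: (N, H) gradient of the loss with respect to next_h.
    cache: assumed to be (x, prev_h, Wx, Wh, next_h).
    """
    x, prev_h, Wx, Wh, next_h = cache
    # Backprop through tanh: d tanh(a) / da = 1 - tanh(a)^2.
    da = dnext_h * (1.0 - next_h ** 2)
    dx = da @ Wx.T            # (N, D)
    dprev_h = da @ Wh.T       # (N, H)
    dWx = x.T @ da            # (D, H)
    dWh = prev_h.T @ da       # (H, H)
    db = da.sum(axis=0)       # (H,)
    return dx, dprev_h, dWx, dWh, db
```

A numerical gradient check against a finite-difference estimate is a cheap way to validate a step like this before building the full sequence version.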

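The forward and backward passes above cover only a single timestep. Training requires running the RNN forward over the full sequence of T timesteps. The forward and backward passes over time are as follows: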

`def rnn_forward(x, h0, Wx, Wh, b):`
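As a rough sketch of the forward pass over time, assuming the input has shape (N, T, D) and the initial hidden state `h0` has shape (N, H), the single-step tanh update can be applied in a loop. The step is inlined here so the block stands alone, and the cache layout is an assumption:

```python
import numpy as np


def rnn_forward(x, h0, Wx, Wh, b):
    """Run a vanilla RNN over a whole sequence.

    x: (N, T, D), h0: (N, H), Wx: (D, H), Wh: (H, H), b: (H,).
    Returns h of shape (N, T, H) holding every hidden state.
    """
    N, T, D = x.shape
    H = h0.shape[1]
    h = np.zeros((N, T, H))
    prev_h = h0
    for t in range(T):
        # Same update as a single step, fed with the previous hidden state.
        prev_h = np.tanh(x[:, t, :] @ Wx + prev_h @ Wh + b)
        h[:, t, :] = prev_h
    # Assumed cache layout for a sequence-level backward pass.
    cache = (x, h0, Wx, Wh, h)
    return h, cache
```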

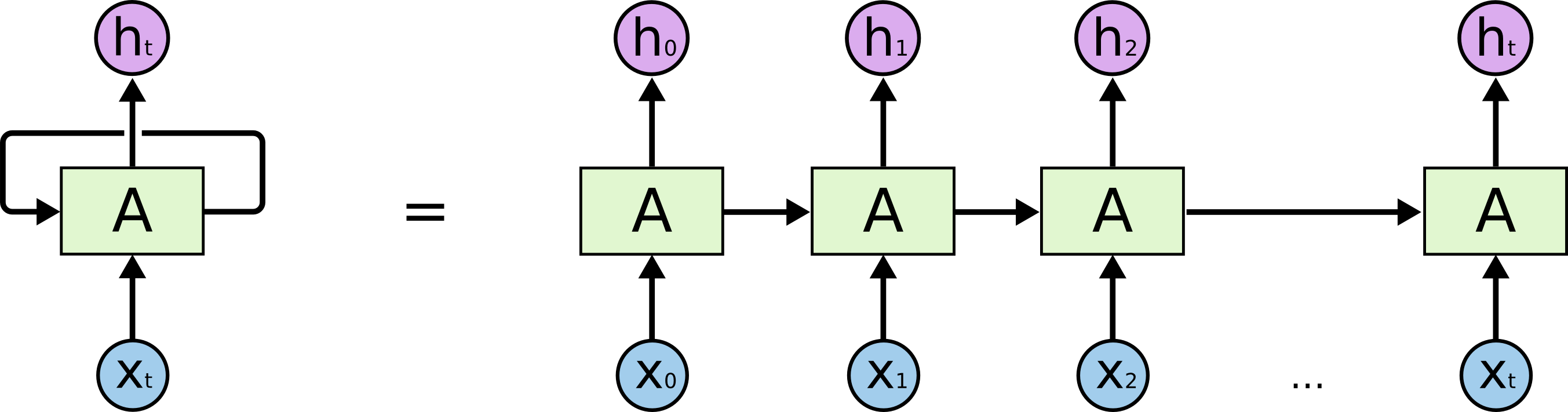

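The main point to watch here is the implementation of the RNN backward pass. During backpropagation, the gradient passed back from the next layer for every timestep is `dh`, whose shape is (N, T, H). In the forward pass, each hidden state flows along two paths: one goes to the next timestep and the other goes to the next layer of the network, as shown in the figure.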

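Take h1 as an example: it is passed both to the next layer and to the hidden-layer input at timestep T2. During backpropagation, h1 therefore receives two gradient contributions, which are summed: one from the next layer, which is `dh[:, t, :]` in the implementation, and one from the next timestep, which is `dprev_h`. For the final timestep T there is no later timestep, so `dprev_h = 0`.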
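The summing of the two gradient paths can be sketched as follows. This is an illustrative version, assuming a tanh RNN and a cache of the form `(x, h0, Wx, Wh, h)` where `h` holds all hidden states; the single-step backward computation is inlined so the block is self-contained.

```python
import numpy as np


def rnn_backward(dh, cache):
    """Backward pass over a whole sequence.

    dh: (N, T, H) gradients arriving from the next layer at every timestep.
    cache: assumed to be (x, h0, Wx, Wh, h) with h of shape (N, T, H).
    """
    x, h0, Wx, Wh, h = cache
    N, T, D = x.shape
    H = h0.shape[1]
    dx = np.zeros_like(x)
    dWx = np.zeros_like(Wx)
    dWh = np.zeros_like(Wh)
    db = np.zeros(H)
    # At the last timestep nothing flows back from a later step.
    dprev_h = np.zeros((N, H))
    for t in reversed(range(T)):
        # Two paths into h_t: the next layer and the next timestep.
        dnext_h = dh[:, t, :] + dprev_h
        prev_h = h[:, t - 1, :] if t > 0 else h0
        da = dnext_h * (1.0 - h[:, t, :] ** 2)  # backprop through tanh
        dx[:, t, :] = da @ Wx.T
        dWx += x[:, t, :].T @ da   # weights are shared, so gradients accumulate
        dWh += prev_h.T @ da
        db += da.sum(axis=0)
        dprev_h = da @ Wh.T
    dh0 = dprev_h
    return dx, dh0, dWx, dWh, db
```

Because the same `Wx`, `Wh`, and `b` are reused at every timestep, their gradients are accumulated across the loop rather than overwritten.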


Q2: Image Captioning with LSTMs

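The LSTM is trained on the same dataset as the RNN. Like the RNN, at each timestep it receives an input and the hidden state from the previous timestep. In addition, the LSTM maintains a cell state $c_{t-1}\in\mathbb{R}^H$ carried over from the previous timestep. The learnable parameters of the LSTM are the input-to-hidden weights $W_x\in\mathbb{R}^{4H\times D}$, the hidden-to-hidden weights $W_h\in\mathbb{R}^{4H\times H}$, and a bias vector $b\in\mathbb{R}^{4H}$. The detailed description that follows is quoted directly from the assignment notebook.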

At each timestep we first compute an activation vector $a\in\mathbb{R}^{4H}$ as $a=W_xx_t + W_hh_{t-1}+b$. We then divide this into four vectors $a_i,a_f,a_o,a_g\in\mathbb{R}^H$ where $a_i$ consists of the first $H$ elements of $a$, $a_f$ is the next $H$ elements of $a$, etc. We then compute the input gate $i\in\mathbb{R}^H$, forget gate $f\in\mathbb{R}^H$, output gate $o\in\mathbb{R}^H$ and block input $g\in\mathbb{R}^H$ as

$$
i = \sigma(a_i) \hspace{4pc} f = \sigma(a_f) \hspace{4pc} o = \sigma(a_o) \hspace{4pc} g = \tanh(a_g)
$$

where $\sigma$ is the sigmoid function and $\tanh$ is the hyperbolic tangent, both applied elementwise.

Finally we compute the next cell state $c_t$ and next hidden state $h_t$ as

$$
c_{t} = f\odot c_{t-1} + i\odot g \hspace{4pc}
h_t = o\odot\tanh(c_t)
$$

where $\odot$ is the elementwise product of vectors.

In the rest of the notebook we will implement the LSTM update rule and apply it to the image captioning task.

In the code, we assume that data is stored in batches so that $X_t \in \mathbb{R}^{N\times D}$, and will work with transposed versions of the parameters: $W_x \in \mathbb{R}^{D \times 4H}$, $W_h \in \mathbb{R}^{H\times 4H}$ so that activations $A \in \mathbb{R}^{N\times 4H}$ can be computed efficiently as $A = X_t W_x + H_{t-1} W_h$

`def lstm_step_forward(x, prev_h, prev_c, Wx, Wh, b):`
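The update rule above translates fairly directly into NumPy. The following is a minimal sketch, assuming the batched, transposed shapes from the text ($W_x$ of shape (D, 4H), $W_h$ of shape (H, 4H)) and the gate order i, f, o, g. The helper `sigmoid`, the return values, and the cache layout are assumptions made for illustration.

```python
import numpy as np


def sigmoid(z):
    """Elementwise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step_forward(x, prev_h, prev_c, Wx, Wh, b):
    """One LSTM timestep.

    x: (N, D), prev_h and prev_c: (N, H),
    Wx: (D, 4H), Wh: (H, 4H), b: (4H,).
    """
    H = prev_h.shape[1]
    a = x @ Wx + prev_h @ Wh + b          # (N, 4H) activations
    i = sigmoid(a[:, 0:H])                # input gate
    f = sigmoid(a[:, H:2 * H])            # forget gate
    o = sigmoid(a[:, 2 * H:3 * H])        # output gate
    g = np.tanh(a[:, 3 * H:4 * H])        # block input
    next_c = f * prev_c + i * g
    next_h = o * np.tanh(next_c)
    # Assumed cache layout for a later backward step.
    cache = (x, prev_h, prev_c, Wx, Wh, i, f, o, g, next_c)
    return next_h, next_c, cache
```

Computing all four gates from a single (N, 4H) matrix product and then slicing is what makes the transposed parameter layout efficient.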